Unleashing the Power of GenAI for Citizen Data Scientists: Building ZAnalyzer.ai

October 24, 2024

Art Morales, Ph.D.

Generative AI has made significant strides in recent years, not just in creating art or generating text, but also in empowering citizen data scientists: individuals who have a knack for data but might lack deep technical skills. With tools like ChatGPT, Sonnet, DBRX, and Gemini, those without coding expertise can now bring their ideas to life, testing concepts and building functional prototypes without getting stuck on the routine tasks that a dedicated developer would handle easily.

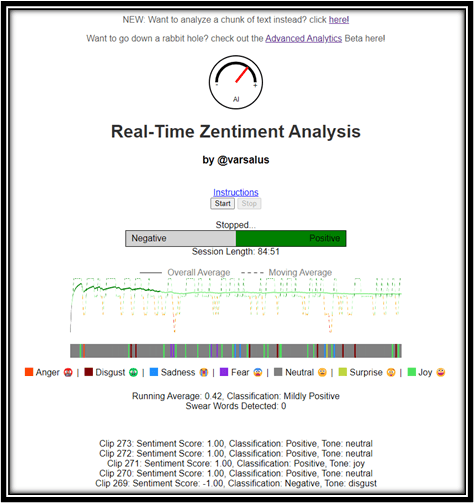

I recently finished a side project, ZAnalyzer.ai, a free tool designed to track the sentiment of conversations in real time (see the screenshot below), and I used ChatGPT and Sonnet heavily for some of the deeper technical tasks that would have taken me a while to figure out on my own. It seemed like a good showcase of how GenAI can help turn a simple idea into a working application faster than I could have managed alone.

Figure 1: Screenshot of the ZAnalyzer tool after listening to a conversation for ~85 minutes. The line chart shows the cumulative average (solid line) and windowed average (dashed line) of the conversation's sentiment, analyzed in small chunks of audio. For each chunk, the system classifies the transcribed text as positive or negative, and the latest result is shown in the Negative: Positive bar (green in this case). The extracted audio is also classified for tone using a different model, and the bar below the line chart shows the tone of each chunk in chronological order. A third model identifies any swear words, and that counter is shown below. Finally, the sentiment and tone for the last 5 chunks are shown at the bottom of the screen.

The Birth of an Idea: From Conversation to Concept

The inspiration for ZAnalyzer.ai came from a casual chat with an old friend. We observed that some conversations, particularly those with certain individuals, often skewed negative. Imagine a tool that could monitor the sentiment of a discussion in real time, alerting you when the tone was turning too negative or suggesting a change of subject to keep things balanced. This concept, useful in scenarios ranging from social interactions to couples therapy, sparked my interest in building a simple application to track conversational sentiment.

Equipped with just this idea, I dictated the basic concept into the ChatGPT app on my phone while driving back from the restaurant. By the time I got home, I had a detailed design brief outlining the potential functionality of the tool. This initial step demonstrated the power of GenAI in rapidly translating vague concepts into actionable plans.

From Design Brief to Working Prototype: GenAI as a Coding Partner

Armed with the design brief, I fired up Visual Studio to begin experimenting with the implementation. I will admit, though, that nothing is more intimidating to me than an empty Visual Studio page. Thanks to the ability to use ChatGPT as my coding partner, all was not lost. Although I had a general understanding of what I wanted to build, I wasn't familiar with the specific libraries or technical nuances needed to get started. ChatGPT filled this gap by generating the initial code snippets, which validated that the core idea was feasible.

As on the few occasions I’ve done it in the past, it was exciting to code alongside ChatGPT. I was relieved to find that it had improved since the last time I used it to code a solo project. It didn’t get as tired or hallucinate as badly as it used to, and it rarely failed, although it did offer unhelpful solutions every now and then… but I expected them and had a workflow that worked for me. It even taught me how to create a logo by writing SVG code directly.

These elements came together in the early proof of concept (PoC). Thanks largely to the help provided by ChatGPT, I could focus on the broader vision without getting bogged down by the intricacies of the code. In the end, I had a working MVP that I could test and expand, and I was even able to publish it at https://zanalyzer.ai. Although limited at first (and it still is), the tool used Wit.ai for speech recognition to convert spoken language into text. The text was then analyzed for sentiment using transformer models like distilbert-base-uncased-finetuned-sst-2-english from Hugging Face, which are particularly adept at understanding context and nuances in language. It then displayed the sentiment using some simple visuals. All in all, it was a complete solution that proved the vision and gave me a platform to continue building.
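To make the chart in Figure 1 more concrete, here is a minimal sketch of how a cumulative average and a windowed average could be computed from per-chunk sentiment results. It assumes each result is a dictionary with a `label` (`POSITIVE` or `NEGATIVE`) and a confidence `score`, which is the shape a Hugging Face sentiment pipeline returns for the SST-2 DistilBERT model. The function names, the signed-score convention, and the window size are illustrative assumptions, not the actual ZAnalyzer.ai code.

```python
from collections import deque


def signed_score(result):
    """Map a classifier result to a value in [-1, 1] (assumed convention)."""
    sign = 1.0 if result["label"].upper() == "POSITIVE" else -1.0
    return sign * result["score"]


def running_averages(results, window=5):
    """Return (cumulative, windowed) average lists, one entry per chunk."""
    recent = deque(maxlen=window)  # holds only the last `window` scores
    total = 0.0
    cumulative, windowed = [], []
    for count, result in enumerate(results, start=1):
        score = signed_score(result)
        total += score
        recent.append(score)
        cumulative.append(total / count)            # average over everything so far
        windowed.append(sum(recent) / len(recent))  # average over recent chunks only
    return cumulative, windowed


# Hypothetical results for three consecutive audio chunks.
chunks = [
    {"label": "POSITIVE", "score": 0.9},
    {"label": "NEGATIVE", "score": 0.8},
    {"label": "NEGATIVE", "score": 0.6},
]
cumulative, windowed = running_averages(chunks, window=2)
```

The windowed line reacts quickly to a recent turn in the conversation, while the cumulative line shows where the conversation stands overall.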

Overcoming Technical Hurdles with GenAI and Determination

The journey wasn't without its challenges. One of the most significant hurdles was deploying the PoC on a server. While the application worked smoothly on my local machine, the hosting provider (pythonanywhere.com) did not support certain features, such as the threads required by Hugging Face transformers. There were no errors in the logs, and ChatGPT's answer was to keep adding logging. The problem wasn't documented anywhere I could find, which led to a frustrating period of trial and error. It wasn't until a lucky Google search (yes, people still use Google search!) revealed the solution (one line of code to turn threads off) that I managed to overcome this obstacle.

In another case, I kept noticing that the speech recognition didn’t work that well. I grew up as a bilingual speaker in the Dragon NaturallySpeaking era of simple algorithms, so my expectations were low and I assumed this was normal behavior. I designed the solution around it by not promising 100% accuracy and just using samples of the conversation. But the problem kept coming up, and anecdotally it seemed worse when using a phone. I read up on microphones and mobile audio libraries in Safari and went down a deep rabbit hole changing the audio recorders and formats… this took a while and it was frustrating. Too many variables to change, too much inconsistency. I took a few days off to try the new season of Diablo IV (among other less fun things) and let it sit for a bit.

With a fresh mind, I dug into the different approaches to speech recognition. ChatGPT had initially recommended using the Google API for speech recognition and it generally worked, but I figured I should dig deeper there. I found a blog comparing the different libraries, with a note about having to get an API key for Google... but it was working without one. There was also a note saying that Google had a hard limit on API calls that could not be extended. After a bit of testing, it appeared that the default call (without an individual API key) used a shared key and that, depending on usage, Google was just failing silently (the danger of deep libraries). In any case, switching to Wit.ai solved the issue once I got my own key, and things miraculously worked across all platforms.
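The lesson about silent failures can be sketched in code. The example below assumes the third-party `SpeechRecognition` package (the post does not say which library the tool uses), and the function name `transcribe_chunk` is hypothetical. The idea is to require a personal Wit.ai key up front and to distinguish "nothing intelligible was said" from "the service call failed", rather than letting both look like an empty transcript.

```python
def transcribe_chunk(wav_path, wit_key):
    """Transcribe one WAV chunk with Wit.ai, surfacing failures explicitly."""
    if not wit_key:
        # Refuse to run without a personal key instead of relying on a
        # shared default that may be rate limited without warning.
        raise ValueError("a personal Wit.ai API key is required")

    # Assumed dependency: pip install SpeechRecognition
    import speech_recognition as sr

    recognizer = sr.Recognizer()
    with sr.AudioFile(wav_path) as source:
        audio = recognizer.record(source)  # read the whole file into memory

    try:
        return recognizer.recognize_wit(audio, key=wit_key)
    except sr.UnknownValueError:
        # The service answered but could not make out any speech.
        return ""
    except sr.RequestError as exc:
        # Network, quota, or authentication problem: make it loud.
        raise RuntimeError(f"speech service request failed: {exc}") from exc
```

With this shape, a quota or key problem shows up as an exception in the logs instead of as a conversation that mysteriously has no text.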

The most frustrating issue had nothing to do with code generation. After developing the tool and testing it on the desktop, everything was working fine, but when I had a friend test it on her phone, she wasn’t getting good results. It appeared to work, but the results didn’t make sense. After chasing my tail for a while, I noticed that the text recognized from recordings made on Windows was much longer than the text recognized from recordings made on the iPhone. Deeper testing showed that the conversion from MP4 to WAV resulted in a 1-second clip instead of the expected 10-second clip. I spent hours playing with options for FFmpeg and even wrote a program to systematically test every function to see if anything would make a difference. In a moment of clarity, I figured, why not upload the file pairs to ChatGPT and ask whether it saw a difference? But the answers were always inconclusive and circular. I then asked ChatGPT to write code to test the conversion itself and, surprisingly, the results in that environment showed no issues. I finally traced the issue to the fact that the default FFmpeg package in PythonAnywhere is a much older version, and that version apparently has issues reading MP4 recordings made on iOS, while the newer versions don’t. It was then an easy fix to get the latest source for FFmpeg, compile it in the production environment, and change the path to point to the newer FFmpeg… easy, right? At least the countless days and nights I spent in grad school compiling and installing Linux from source came in handy.
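A check like the following would have caught the truncated clips much earlier. This is a minimal sketch rather than the code used in ZAnalyzer.ai: it converts a recording to WAV with an explicitly chosen FFmpeg binary and then verifies the output duration with Python's standard `wave` module. The function names, the 16 kHz mono output settings, the 10-second expectation, and the tolerance are all assumptions for illustration.

```python
import subprocess
import wave


def wav_duration_seconds(wav_path):
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(wav_path, "rb") as wav_file:
        return wav_file.getnframes() / wav_file.getframerate()


def convert_to_wav(src_path, wav_path, ffmpeg_bin="ffmpeg",
                   expected_seconds=10.0, tolerance=1.0):
    """Convert src_path to mono 16 kHz WAV and sanity-check its length.

    ffmpeg_bin can point at a newer, self-compiled FFmpeg instead of the
    version installed system-wide.
    """
    subprocess.run(
        [ffmpeg_bin, "-y", "-i", src_path, "-ac", "1", "-ar", "16000", wav_path],
        check=True,           # raise if FFmpeg exits with an error
        capture_output=True,  # keep FFmpeg's console output out of the app logs
    )
    duration = wav_duration_seconds(wav_path)
    if abs(duration - expected_seconds) > tolerance:
        raise ValueError(
            f"expected about {expected_seconds}s of audio, got {duration:.2f}s"
        )
    return duration
```

Calling `convert_to_wav("chunk.mp4", "chunk.wav", ffmpeg_bin="/path/to/newer/ffmpeg")` (paths hypothetical) would then fail loudly on a 1-second result instead of passing a nearly empty clip on to speech recognition.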

These challenges underscore a critical point: while GenAI can streamline much of the development process, a combination of technical know-how, perseverance, creativity, and targeted problem-solving is still essential, especially when facing unexpected technical issues. Also, being stubborn and not giving up easily is a major advantage.

Expanding the MVP: Asking More Interesting Questions

Once the MVP was up and running, the real fun began. With the foundational elements in place, I could start asking more interesting questions. I wanted to explore the qualities of the audio, and I was quickly able to add the analysis of features such as MFCC, pitch, loudness, spectral centroid, and spectral bandwidth, extracted using Python libraries like librosa, which offer deeper insight into the emotional tone of conversations. What was cool is that I didn’t initially know which library could help with that, and ChatGPT suggested a few that I could test and validate, saving me tons of time and frustration. The process gave me the ability to explore additional features that I wouldn't have attempted alone. For instance, I added responsive graphics to visualize sentiment scores over time and incorporated asynchronous communication, significantly enhancing the user experience. This iterative process, supported by GenAI, allowed me to build out the tool's capabilities without needing to become an expert in every language or framework involved. To be fair, I don’t yet know what to do with the added data, but I can’t wait to explore the aural properties of speech and how they correlate with the sentiment (especially once I separate speakers through diarization). Obviously, there is tons of research out there on the topic, but the point is that GenAI can enable the exploration of ideas, and that can only lead to good things.
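For readers curious what this kind of feature extraction looks like, here is a rough sketch using librosa. It is not the code from ZAnalyzer.ai: the function names, the choice of 13 MFCC coefficients, the 65 to 400 Hz pitch search range, and the use of RMS energy as a stand-in for loudness are all assumptions made for illustration.

```python
def summarize(values):
    """Reduce a sequence of per-frame values to a few summary statistics."""
    values = list(values)
    return {
        "mean": sum(values) / len(values),
        "min": min(values),
        "max": max(values),
    }


def extract_features(audio_path):
    """Extract a handful of acoustic features from one audio chunk."""
    # Assumed dependency: pip install librosa
    import librosa

    y, sr = librosa.load(audio_path, sr=None)  # keep the file's own sample rate

    mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=13)
    pitch = librosa.yin(y, fmin=65.0, fmax=400.0, sr=sr)  # rough speech range
    rms = librosa.feature.rms(y=y)                        # loudness proxy
    centroid = librosa.feature.spectral_centroid(y=y, sr=sr)
    bandwidth = librosa.feature.spectral_bandwidth(y=y, sr=sr)

    return {
        "mfcc_mean": mfcc.mean(axis=1).tolist(),  # one mean per coefficient
        "pitch_hz": summarize(pitch.tolist()),
        "loudness_rms": summarize(rms.flatten().tolist()),
        "spectral_centroid_hz": summarize(centroid.flatten().tolist()),
        "spectral_bandwidth_hz": summarize(bandwidth.flatten().tolist()),
    }
```

Summarizing each feature per chunk keeps the output small enough to store alongside the sentiment score for that chunk, which makes later correlation analysis straightforward.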

As I keep adding features, the tool becomes more complete and more complex. ZAnalyzer.ai can now display the specific text segments associated with sentiment scores, connecting audio directly with spoken content, and it also uses a completely different model to classify the tone of the conversation. This granularity enables users to understand how certain phrases or intonations impact overall sentiment, making the tool valuable for applications in customer service, coaching, and more. On the other hand, I really wanted to show the text on hover in the graphs but couldn’t get it to work well… that’s an improvement for another day. One of these days I hope to separate speakers, but that’s harder to do in real time and will require some changes that I don’t have the bandwidth for yet. I’ll leave that for the next time I’m overcaffeinated at 2 AM and done with my day job tasks.

Empowering Citizen Data Scientists: The Broader Implications

The story of ZAnalyzer.ai highlights a broader trend: generative AI is democratizing access to technology. By reducing the barriers to entry, these tools empower anyone with an idea to experiment and build without needing to know all the technical details upfront. While I may never be a top-tier Python or JavaScript developer, GenAI has allowed me to create something meaningful and functional that serves a real purpose.

The potential applications are vast, extending beyond sentiment tracking to include areas like communication coaching, AI interactions, market research, and more. With the ability to quickly prototype and iterate on ideas, citizen data scientists can focus on solving problems rather than getting bogged down by the complexities of coding.

Conclusion: Turning Ideas into Reality with GenAI

Building ZAnalyzer.ai was a rewarding experience that showed me firsthand how generative AI can turn a simple conversation into a fully realized tool. By acting as a coding partner, GenAI allowed me to bypass many of the traditional hurdles associated with software development, enabling me to focus on the problem rather than the implementation.

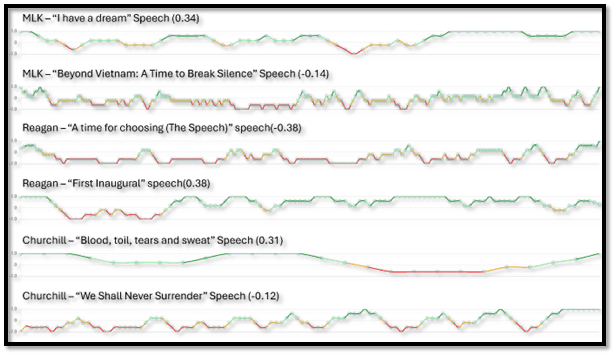

I have since continued to expand the tool to analyze the sentiment in text (it is cool to see how different speakers speak in different settings) and even added a database backend and session logging to enable better data exploration later. I have lots of ideas for what to do next, and the great thing is that nothing is preventing me from trying them.

Figure 2: Composite screenshot of the ZAnalyzer tool after analyzing some famous speeches. The text is divided into small segments, the sentiment (Positive or Negative) is determined for each one, and the windowed average is shown.

If you have an idea you've been sitting on, now is the time to explore it. With tools like ChatGPT and others, the path from concept to creation has never been more accessible. Whether you're looking to build a prototype, test a hypothesis, or just tinker with a new concept, generative AI can be the catalyst that brings your ideas to life.

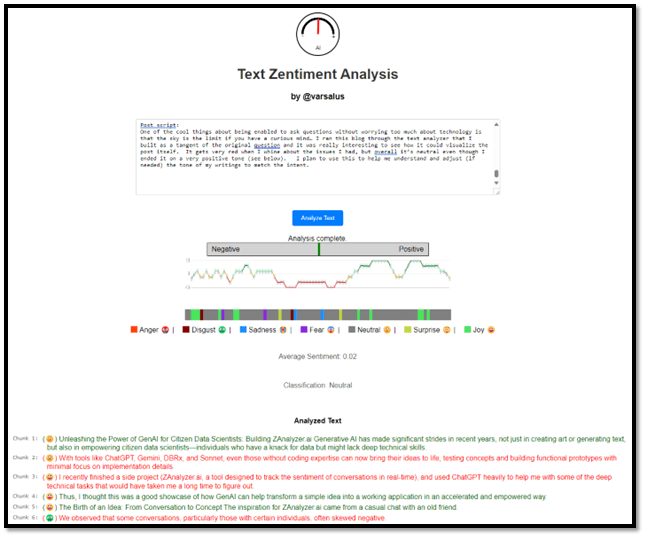

Postscript:

One of the cool things about being enabled to ask questions without worrying too much about technology is that the sky is the limit if you have a curious mind… I ran this blog through the text analyzer version that was a byproduct of the original question and it was really interesting to see how it could visualize the post itself. It gets very red when I whine about the issues I had, but overall it’s neutral even though I ended it in a very positive tone (see below). I plan to use this to help me understand and adjust (if needed) the tone of my writing to match the intent.

Figure 3: Screenshot of the latest version of the text analyzer that now includes the tone for each sentence and the analysis of the whole text.